June 25, 2025

Stefan Hajnoczi

Profiling tools I use for QEMU storage performance optimization

A fair amount of the development work I do is related to storage performance in QEMU/KVM. Although I have written about disk I/O benchmarking and my performance analysis workflow in the past, I haven't covered the performance tools that I frequently rely on. In this post I'll go over what's in my toolbox and hopefully this will be helpful to others.

Performance analysis is hard when the system is slow but there is no clear bottleneck. If a CPU profile shows that a function is consuming significant amounts of time, then that's a good target for optimizations. On the other hand, if the profile is uniform and each function only consumes a small fraction of time, then it is difficult to gain much by optimizing just one function (although taking function call nesting into account may point towards parent functions that can be optimized).

If you are measuring just one metric, then eventually the profile will become uniform and there isn't much left to optimize. It helps to measure at multiple layers of the system in order to increase the chance of finding bottlenecks.
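To put rough numbers on why a uniform profile is hard to improve, Amdahl's law gives the overall speedup when one part of the runtime is made faster. The sketch below is only an illustration: the function name and the 40% and 1% fractions are made up for the example and are not measurements from QEMU.

```python
def overall_speedup(fraction: float, factor: float) -> float:
    """Amdahl's law: whole-program speedup when a part taking `fraction`
    of the runtime (0..1) becomes `factor` times faster."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    if factor <= 0:
        raise ValueError("factor must be positive")
    return 1.0 / ((1.0 - fraction) + fraction / factor)


# Hypothetical profiles: one clear hot spot vs. a uniform profile.
hot = overall_speedup(0.40, 2.0)   # function taking 40% of the time, made 2x faster
flat = overall_speedup(0.01, 2.0)  # function taking 1% of the time, made 2x faster
print(f"hot spot: {hot:.3f}x, uniform profile: {flat:.3f}x")
```

Doubling the speed of a function that takes 1% of the time barely moves the total, which is why measuring at another layer is usually more productive at that point.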

Here are the tools I like to use when hunting for QEMU storage performance optimizations.

kvm_stat

kvm_stat is a tool that runs on the host and counts events from the kvm.ko kernel module, including device register accesses (MMIO), interrupt injections, and more. These are often associated with vmentry/vmexit events where control passes between the guest and the hypervisor. Each time this happens there is a cost and it is preferable to minimize the number of vmentry/vmexit events.

kvm_stat will let you identify inefficiencies when guest drivers are accessing devices as well as low-level activity (MSRs, nested page tables, etc) that can be optimized.

Here is output from an I/O intensive workload:

Event                     Total %Total CurAvg/s
kvm_entry               1066255   20.3    43513
kvm_exit                1066266   20.3    43513
kvm_hv_timer_state       954944   18.2    38754
kvm_msr                  487702    9.3    19878
kvm_apicv_accept_irq     278926    5.3    11430
kvm_apic_accept_irq      278920    5.3    11430
kvm_vcpu_wakeup          250055    4.8    10128
kvm_pv_tlb_flush         250000    4.8    10123
kvm_msi_set_irq          229439    4.4     9345
kvm_ple_window_update    213309    4.1     8836
kvm_fast_mmio            123433    2.3     5000
kvm_wait_lapic_expire     39855    0.8     1628
kvm_apic_ipi               9614    0.2      457
kvm_apic                   9614    0.2      457
kvm_unmap_hva_range           9    0.0        1
kvm_fpu                      42    0.0        0
kvm_mmio                     28    0.0        0
kvm_userspace_exit           21    0.0        0
vcpu_match_mmio              19    0.0        0
kvm_emulate_insn             19    0.0        0
kvm_pio                       2    0.0        0
Total                   5258472          214493

Here I'm looking for high CurAvg/s rates. Any counters at 100k/s are well worth investigating.

Important events:

- kvm_entry/kvm_exit: vmentries and vmexits are when the CPU transfers control between guest mode and the hypervisor.

- kvm_msr: Model Specific Register accesses

- kvm_msi_set_irq: Interrupt injections (Message Signalled Interrupts)

- kvm_fast_mmio/kvm_mmio/kvm_pio: Device register accesses
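When comparing several runs it can be handy to filter this kind of output by rate instead of reading it by eye. The following is a minimal sketch that assumes the four whitespace-separated columns shown in the sample output; the helper names and the 10,000/s threshold are hypothetical, chosen only so the shortened sample produces some output.

```python
def parse_kvm_stat(text: str) -> dict[str, tuple[int, float, int]]:
    """Parse rows of 'Event Total %Total CurAvg/s' into a dict.

    Assumes whitespace-separated columns as in the sample output;
    the header and the final 'Total' row are skipped.
    """
    rows = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 4 or fields[0] in ("Event", "Total"):
            continue
        name, total, pct, rate = fields
        rows[name] = (int(total), float(pct), int(rate))
    return rows


def busy_events(rows, min_rate):
    """Return (event, rate) pairs with CurAvg/s >= min_rate, highest first."""
    hits = [(name, rate) for name, (_, _, rate) in rows.items() if rate >= min_rate]
    return sorted(hits, key=lambda item: item[1], reverse=True)


# A few rows copied from the output above.
SAMPLE = """\
Event                     Total %Total CurAvg/s
kvm_entry               1066255   20.3    43513
kvm_exit                1066266   20.3    43513
kvm_hv_timer_state       954944   18.2    38754
kvm_msr                  487702    9.3    19878
kvm_fast_mmio            123433    2.3     5000
Total                   5258472          214493
"""

rows = parse_kvm_stat(SAMPLE)
for name, rate in busy_events(rows, min_rate=10_000):
    print(f"{name:22s} {rate:>8d}/s")
```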

sysstat

sysstat is a venerable suite of performance monitoring tools covering CPU, network, disk, and memory activity. It can be used equally within guests and on the host. It is like an extended version of the classic vmstat(8) tool.

The mpstat, pidstat, and iostat tools are the ones I use most often:

- mpstat reports CPU consumption, including %usr (userspace), %sys (kernel), %guest (guest mode), %steal (hypervisor), and more. Use this to find CPUs that are maxed out or poor use of parallelism/multi-threading.

- pidstat reports per-process and per-thread statistics. This is useful for identifying specific threads that are consuming resources.

- iostat reports disk statistics. This is useful for comparing I/O statistics between bare metal and guests.

blktrace

blktrace monitors Linux block I/O activity. This can be used equally within guests and on the host. Often it's interesting to capture traces in both the guest and on the host so they can be compared. If the I/O pattern is different then something in the I/O stack is modifying requests. That can be a sign of a misconfiguration.

The blktrace data can be analyzed and plotted with the btt command. For example, the latencies from driver submission to completion can be summarized to find the overhead compared to bare metal.

perf-top

In its default mode, perf-top is a sampling CPU profiler. It periodically collects the CPU's program counter value so that a profile can be generated showing hot functions. It supports call graph recording with the -g option if you want to aggregate nested function calls and find out which parent functions are responsible for the most CPU usage.

perf-top (and its non-interactive perf-record/perf-report cousin) is good at identifying inner loops of programs, excessive memcpy/memset, expensive locking instructions, instructions with poor cache hit rates, etc. When I use it to profile QEMU it shows the host kernel and QEMU userspace activity. It does not show guest activity.

Here is the output without call graph recording where we can see vmexit activity, QEMU virtqueue processing, and excessive memset at the top of the profile:

3.95%  [kvm_intel]         [k] vmx_vmexit
3.37%  qemu-system-x86_64  [.] virtqueue_split_pop
2.64%  libc.so.6           [.] __memset_evex_unaligned_erms
2.50%  [kvm_intel]         [k] vmx_spec_ctrl_restore_host
1.57%  [kernel]            [k] native_irq_return_iret
1.13%  qemu-system-x86_64  [.] bdrv_co_preadv_part
1.13%  [kernel]            [k] sync_regs
1.09%  [kernel]            [k] native_write_msr
1.05%  [nvme]              [k] nvme_poll_cq

The goal is to find functions or families of functions that consume significant amounts of CPU time so they can be optimized. If the profile is uniform with most functions taking less than 1%, then the bottlenecks are more likely to be found with other tools.
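One simple way to look at "families of functions" is to add up the overhead per shared object (kernel module, QEMU binary, libc). The sketch below does that for the sample profile shown earlier. It assumes each line has exactly four whitespace-separated fields (percentage, object, symbol type, symbol), and the helper name is hypothetical.

```python
from collections import defaultdict

# Lines copied from the perf-top output above.
PROFILE = """\
3.95% [kvm_intel] [k] vmx_vmexit
3.37% qemu-system-x86_64 [.] virtqueue_split_pop
2.64% libc.so.6 [.] __memset_evex_unaligned_erms
2.50% [kvm_intel] [k] vmx_spec_ctrl_restore_host
1.57% [kernel] [k] native_irq_return_iret
1.13% qemu-system-x86_64 [.] bdrv_co_preadv_part
1.13% [kernel] [k] sync_regs
1.09% [kernel] [k] native_write_msr
1.05% [nvme] [k] nvme_poll_cq
"""


def overhead_by_object(text):
    """Sum the overhead percentage per shared object (second column)."""
    totals = defaultdict(float)
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 4 or not fields[0].endswith("%"):
            continue  # skip anything that is not a profile row
        percent, shared_object, _kind, _symbol = fields
        totals[shared_object.strip("[]")] += float(percent.rstrip("%"))
    return dict(totals)


totals = overhead_by_object(PROFILE)
for name, percent in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{percent:5.2f}%  {name}")
```

Grouped this way, the kvm_intel rows together outweigh any single QEMU function in this sample, which points at vmexit handling rather than one hot loop.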

perf-trace

perf-trace is an strace-like tool for monitoring system call activity. For performance monitoring it has a --summary option that shows time spent in system calls and the counts. This is how you can identify system calls that block for a long time or that are called too often.

Here is an example from the host showing a summary of a QEMU IOThread that uses io_uring for disk I/O and ioeventfd/irqfd for VIRTIO device activity:

IO iothread1 (332737), 1763284 events, 99.9%

syscall            calls  errors    total       min       avg       max   stddev
                                   (msec)    (msec)    (msec)    (msec)      (%)
--------------- --------  ------ -------- --------- --------- --------- --------
io_uring_enter    351680       0 8016.308     0.003     0.023     0.209    0.11%
write             390189       0 1238.501     0.002     0.003     0.098    0.08%
read              121057       0  305.355     0.002     0.003     0.019    0.14%
ppoll              18707       0   62.228     0.002     0.003     0.023    0.32%
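To see at a glance where the syscall time goes, the totals can be turned into shares. This is a small sketch using the calls and total columns from the summary above; the function name is hypothetical and the shares are relative to the four syscalls listed, not to wall-clock time.

```python
# (syscall, calls, total_msec) taken from the summary table above.
SUMMARY = [
    ("io_uring_enter", 351680, 8016.308),
    ("write", 390189, 1238.501),
    ("read", 121057, 305.355),
    ("ppoll", 18707, 62.228),
]


def syscall_shares(summary):
    """Return {syscall: (share_of_total_time, avg_usec_per_call)}."""
    grand_total = sum(total for _, _, total in summary)
    return {
        name: (total / grand_total, total / calls * 1000.0)
        for name, calls, total in summary
    }


shares = syscall_shares(SUMMARY)
for name, (share, avg_usec) in shares.items():
    print(f"{name:15s} {share:6.1%} of syscall time, {avg_usec:6.2f} us/call")
```

In this sample io_uring_enter accounts for most of the time spent in system calls, while write is called more often but is cheap per call.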

Conclusion

We looked at kvm_stat, sysstat, blktrace, perf-top, and perf-trace. They provide performance metrics from different layers of the system. Another worthy mention is the bcc collection of eBPF-based tools that offers a huge array of performance monitoring and troubleshooting tools. Let me know which tools you like to use!

April 23, 2025

QEMU project

QEMU version 10.0.0 released

We’d like to announce the availability of the QEMU 10.0.0 release. This release contains 2800+ commits from 211 authors.

You can grab the tarball from our download page. The full list of changes is available in the changelog.

Highlights include:

- block: virtio-scsi multiqueue support for using different I/O threads to process requests for each queue (similar to the virtio-blk multiqueue support that was added in QEMU 9.2)

- VFIO: improved support for IGD passthrough on all Intel Gen 11/12 devices

- Documentation: significant improvement/overhaul of documentation for QEMU Machine Protocol to make it clearer and more organized, including all commands/events/types now being cross-reference-able via click-able links in generated documentation

- ARM: emulation support for Secure EL2 physical and virtual timers

- ARM: emulation support for FEAT_AFP, FEAT_RPRES, and FEAT_XS architecture features

- ARM: new board models for NPCM8445 Evaluation and i.MX 8M Plus EVK boards

- HPPA: new SeaBIOS-hppa version 18 with lots of fixes and enhancements

- HPPA: translation speed and virtual CPU reset improvements

- HPPA: emulation support for Diva GSP BMC boards

- LoongArch: support for CPU hotplug, paravirtual IPIs, KVM steal time accounting, and virtual ‘extioi’ interrupt routing.

- RISC-V: ISA/extension support for riscv-iommu-sys devices, ‘svukte’, ‘ssstateen’, ‘smrnmi’, ‘smdbltrp’/’ssdbltrp’, ‘supm’/’sspm’, and IOMMU translation tags

- RISC-V: emulation support for Ascalon and RV64 Xiangshan Nanhu CPUs, and Microblaze V boards.

- s390x: add CPU model support for the generation 17 mainframe CPU

- s390x: add support for virtio-mem and for bypassing IOMMU to improve PCI device performance

- x86: CPU model support for Clearwater Forest and Sierra Forest v2

- x86: faster emulation of string instructions

- and lots more…

Thank you to everybody who contributed to this release, whether that was by writing code, reporting bugs, improving documentation, testing, or providing the project with CI resources. We couldn’t do these without you!

KVM on Z

New Release: Ubuntu 25.04

Canonical released a new version of their Ubuntu server offering, Ubuntu Server 25.04!

- Exploitation of the AI facility in the Telum II processor

- New Secure Execution and Crypto features

- Network Express support

- Power consumption reporting

See the announcement on the mailing list here, and the blog entry at Canonical with all Z-specific highlights here.

April 09, 2025

KVM on Z

IBM z17 announced!!

Our latest offering in the IBM Z family, IBM z17, was announced yesterday. General availability will be June 18.

See the official announcement, the updated Linux support matrix, and the Technical Guide with all the insights you will need.

March 06, 2025

QEMU project

Announcing QEMU Google Summer of Code 2025 internships

QEMU is participating in Google Summer of Code again this year! Google Summer of Code is an open source internship program that offers paid remote work opportunities for contributing to open source. Internships run May through August, so if you have time and want to experience open source development, read on to find out how you can apply.

Each intern is paired with one or more mentors, experienced QEMU contributors who support them during the internship. Code developed by the intern is submitted through the same open source development process that all QEMU contributions follow. This gives interns experience with contributing to open source software. Some interns then choose to pursue a career in open source software after completing their internship.

Find out if you are eligible

Information on who can apply for Google Summer of Code is here.

Select a project idea

Look through the list of QEMU project ideas and see if there is something you are interested in working on. Once you have found a project idea you want to apply for, email the mentor for that project idea to ask any questions you may have and discuss the idea further.

Submit your application

You can apply for Google Summer of Code from March 24th to April 8th.

Good luck with your applications!

If you have questions about applying for QEMU GSoC, please email Stefan Hajnoczi or ask on the #qemu-gsoc IRC channel.

December 17, 2024

KVM on Z

Migration fails with something like ..pckmo.. ?

If you run into a situation where migration fails with something like

internal error: QEMU unexpectedly closed the monitor (vm='testguest'):

qemu-kvm: Some features requested in the CPU model are not available in the current configuration: pckmo-aes-256 pckmo-aes-192 pckmo-aes-128 pckmo-etdea-192 pckmo-etdea-128 pckmo-edea msa9_pckmo Consider a different accelerator, QEMU, or kernel version

This indicates that the two hosts are configured differently regarding the CPACF key management operations.

You can either configure both LPARs the same way (preferred if the security scheme allows for that), which requires de-activating and re-activating the LPAR,

or

you can change the CPU model of the guest so that it no longer has these pckmo functions, by changing your guest XML from "host-model" to "host-model with some features disabled".

To do so, shut down the guest and change the XML from

<cpu mode='host-model' check='partial'/>

to

<cpu mode='host-model' check='partial'>
  <feature policy='disable' name='msa3'/>
  <feature policy='disable' name='msa9_pckmo'/>
</cpu>
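If several guests need the same change, the edit can be scripted. The following is a minimal sketch using Python's standard xml.etree.ElementTree; the function name and the trimmed domain definition are hypothetical, and a real libvirt domain XML has many more elements. The resulting XML would still have to be applied with your usual libvirt tooling.

```python
import xml.etree.ElementTree as ET


def disable_cpu_features(domain_xml: str, features: list[str]) -> str:
    """Add <feature policy='disable' .../> children to the <cpu> element."""
    root = ET.fromstring(domain_xml)
    cpu = root.find("cpu")
    if cpu is None:
        raise ValueError("domain XML has no <cpu> element")
    already = {f.get("name") for f in cpu.findall("feature")}
    for name in features:
        if name not in already:  # do not add the same feature twice
            ET.SubElement(cpu, "feature", {"policy": "disable", "name": name})
    return ET.tostring(root, encoding="unicode")


# Trimmed, hypothetical domain definition for illustration only.
DOMAIN = (
    "<domain type='kvm'><name>testguest</name>"
    "<cpu mode='host-model' check='partial'/></domain>"
)

new_xml = disable_cpu_features(DOMAIN, ["msa3", "msa9_pckmo"])
print(new_xml)
```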

December 11, 2024

QEMU project

QEMU version 9.2.0 released

We’d like to announce the availability of the QEMU 9.2.0 release. This release contains 1700+ commits from 209 authors.

You can grab the tarball from our download page. The full list of changes is available in the changelog.

Highlights include:

- virtio-gpu: support for 3D acceleration of Vulkan applications via Venus Vulkan driver in the guest and virglrenderer host library

- crypto: GLib crypto backend now supports SHA-384 hashes

- migration: QATzip-accelerated compression support while using multiple migration streams

- Rust: experimental support for device models written in Rust (for development use only)

- ARM: emulation support for FEAT_EBF16, FEAT_CMOW architecture features

- ARM: support for two-stage SMMU translation for sbsa-ref and virt boards

- ARM: support for CPU Security Extensions for xilinx-zynq-a9 board

- ARM: 64GB+ memory support when using HVF acceleration on newer Mac systems

- HPPA: SeaBIOS-hppa v17 firmware with various fixes and enhancements

- RISC-V: IOMMU support for virt machine

- RISC-V: support for control flow integrity and Svvptc extensions, and support for Bit-Manipulation extension on OpenTitan boards

- RISC-V: improved performance for vector unit-stride/whole register ld/st instructions

- s390x: support for booting from other devices if the previous ones fail

- x86: support for new nitro-enclave machine type that can emulate AWS Nitro Enclave and can boot from Enclave Image Format files.

- x86: KVM support for enabling AVX10, as well as enabling specific AVX10 versions via command-line

- and lots more…

Thank you to everybody who contributed to this release, whether that was by writing code, reporting bugs, improving documentation, testing, or providing the project with CI resources. We couldn’t do these without you!

October 23, 2024

Thomas Huth

How to use secure RHCOS images on s390x

Recently, I needed to debug a problem that only occurred in RHCOS images that are running in secure execution mode on an IBM Z system. Since I don’t have an OCP installation at hand, I wanted to run such an image directly with QEMU or libvirt. This sounded easy at first glance, since there are qcow2 images available for RHCOS, but in the end, it was quite tricky to get this working, so I’d like to summarize the steps here; maybe it’s helpful for somebody else, too. Since the “secex” images are encrypted, you cannot play the usual tricks with e.g. guestfs here; you have to go through the ignition process of the image first. Well, maybe there is already the right documentation for this available somewhere and I missed it, but most other documents mainly talk about x86 or normal (unencrypted) images (like the one for Fedora CoreOS on libvirt), so I think it will be helpful to have this summary here anyway.

Preparation

First, make sure that you have the right tools installed for this task:

sudo dnf install butane wget mkpasswd openssh virt-install qemu-img

Since we are interested in the secure execution image, we have to download the image with “secex” in the name, together with the right GPG key that is required for encrypting the config file later, for example:

wget https://mirror.openshift.com/pub/openshift-v4/s390x/dependencies/rhcos/4.16/4.16.3/rhcos-qemu-secex.s390x.qcow2.gz

wget https://mirror.openshift.com/pub/openshift-v4/s390x/dependencies/rhcos/4.16/4.16.3/rhcos-ignition-secex-key.gpg.pub

Finally, uncompress the image. And since we want to avoid modifying the original image, let’s also create an overlay qcow2 image for it:

gzip -d rhcos-qemu-secex.s390x.qcow2.gz

qemu-img create -f qcow2 -b rhcos-qemu-secex.s390x.qcow2 -F qcow2 rhcos.qcow2

Creation of the configuration file

For being able to log in your guest via ssh later, you need an ssh key, so let’s create one and add it to your local ssh-agent:

ssh-keygen -f rhcos-key

ssh-add rhcos-key

If you also want to log in on the console via password, create a password hash with the mkpasswd tool, too.

Now create a butane configuration file and save it as “config.bu”:

variant: fcos
version: 1.4.0
passwd:
  users:
    - name: core
      ssh_authorized_keys:
        - INSERT_THE_CONTENTS_OF_YOUR_rhcos-key.pub_FILE_HERE
      password_hash: INSERT_THE_HASH_FROM_mkpasswd_HERE
      groups:
        - wheel
storage:
  files:
    - path: /etc/se-hostkeys/ibm-z-hostkey-1
      overwrite: true
      contents:
        local: HKD.crt
systemd:
  units:
    - name: serial-getty@.service
      mask: false

Make sure to replace the “INSERT_…” markers in the file with the contents of your rhcos-key.pub and the hash from mkpasswd, and also make sure to have the host key document (required for encrypting the guest with genprotimg) available as HKD.crt in the current directory.
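If you prefer not to edit the file by hand, the two markers can be filled in by a few lines of script. This is only a sketch: the function name, the trimmed template and the key and hash values are placeholders made up for the example, and it assumes the inserted values are valid as plain YAML scalars.

```python
def fill_placeholders(template: str, ssh_pubkey: str, password_hash: str) -> str:
    """Replace the two INSERT_... markers used in config.bu."""
    filled = template.replace(
        "INSERT_THE_CONTENTS_OF_YOUR_rhcos-key.pub_FILE_HERE", ssh_pubkey.strip()
    ).replace("INSERT_THE_HASH_FROM_mkpasswd_HERE", password_hash.strip())
    if "INSERT_" in filled:
        raise ValueError("unreplaced INSERT_ marker left in config")
    return filled


# Only the passwd part of config.bu is reproduced here to keep the example short.
TEMPLATE = """\
passwd:
  users:
    - name: core
      ssh_authorized_keys:
        - INSERT_THE_CONTENTS_OF_YOUR_rhcos-key.pub_FILE_HERE
      password_hash: INSERT_THE_HASH_FROM_mkpasswd_HERE
"""

# Made-up values; in practice read rhcos-key.pub and the mkpasswd output.
filled = fill_placeholders(
    TEMPLATE,
    ssh_pubkey="ssh-ed25519 AAAAexample core@example\n",
    password_hash="$y$j9T$examplehash\n",
)
print(filled)
```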

Next, the butane config file needs to be converted into an ignition file, which then needs to be encrypted with the GPG key of the RHCOS image:

butane -d . config.bu > config.ign

gpg --recipient-file rhcos-ignition-secex-key.gpg.pub --yes \

--output config.crypted --armor --encrypt config.ign

Ignition of the guest image

The encrypted config file can now be used to start the ignition of the guest. On s390x, the config file is not presented via the “fw_cfg” mechanism to the guest (like it is done on x86), but with a drive that has a special serial number. Thus QEMU should be started like this:

/usr/libexec/qemu-kvm -d guest_errors -accel kvm -m 4G -smp 4 -nographic \

-object s390-pv-guest,id=pv0 -machine confidential-guest-support=pv0 \

-drive if=none,id=dr1,file=rhcos.qcow2,auto-read-only=off,cache=unsafe \

-device virtio-blk,drive=dr1 -netdev user,id=n1,hostfwd=tcp::2222-:22 \

-device virtio-net-ccw,netdev=n1 \

-drive if=none,id=drv_cfg,format=raw,file=config.crypted,readonly=on \

-device virtio-blk,serial=ignition_crypted,iommu_platform=on,drive=drv_cfg

This should start the ignition process during the first boot of the guest. During future boots of the guest, you don’t have to specify the drive with the “config.crypted” file anymore.

Once the ignition is done, you can log in to the guest either on the console with the password that you created with mkpasswd, or via ssh:

ssh -p 2222 core@localhost

Now you should be able to use the image. But keep in mind that this is an rpm-ostree based image, so for installing additional packages, you have to use rpm-ostree install instead of dnf install here. And the kernel can be replaced like this, for example:

sudo rpm-ostree override replace \

kernel-5.14.0-...s390x.rpm \

kernel-core-5.14.0-...s390x.rpm \

kernel-modules-5.14.0-...s390x.rpm \

kernel-modules-core-5.14.0-...s390x.rpm \

kernel-modules-extra-5.14.0-...s390x.rpm

That’s it! Now you can enjoy your configured secure-execution RHCOS image!

Special thanks to Nikita D. for helping me understand the ignition process of the secure execution images.

October 01, 2024

Stefan Hajnoczi

Video and slides available for "IOThread Virtqueue Mapping" talk at KVM Forum 2024

My KVM Forum 2024 talk "IOThread Virtqueue Mapping: Improving virtio-blk SMP scalability in QEMU" is now available on YouTube. The slides are also available here.

IOThread Virtqueue Mapping is a new QEMU feature for configuring multiple IOThreads that will handle a virtio-blk device's virtqueues. This means QEMU can take advantage of the host's Linux multi-queue block layer and assign CPUs to I/O processing. Giving additional resources to virtio-blk emulation allows QEMU to achieve higher IOPS and saturate fast local NVMe drives. This is especially important for applications that submit I/O on many vCPUs simultaneously - a workload that QEMU had trouble keeping up with in the past.

You can read more about IOThread Virtqueue Mapping in this Red Hat blog post.

September 03, 2024

QEMU project

QEMU version 9.1.0 released

We’d like to announce the availability of the QEMU 9.1.0 release. This release contains 2800+ commits from 263 authors.

You can grab the tarball from our download page. The full list of changes is available in the changelog.

Highlights include:

- migration: compression offload support via Intel In-Memory Analytics Accelerator (IAA) or User Space Accelerator Development Kit (UADK), along with enhanced support for postcopy failure recovery

- virtio: support for VIRTIO_F_NOTIFICATION_DATA, allowing guest drivers to provide additional data as part of sending device notifications for performance/debug purposes

- guest-agent: support for guest-network-get-route command on linux, guest-ssh-* commands on Windows, and enhanced CLI support for configuring allowed/blocked commands

- block: security fixes for QEMU NBD server and NBD TLS encryption

- ARM: emulation support for FEAT_NMI, FEAT_CSV2_3, FEAT_ETS2, FEAT_Spec_FPACC, FEAT_WFxT, FEAT_Debugv8p8 architecture features

- ARM: nested/two-stage page table support for emulated SMMUv3

- ARM: xilinx_zynq board support for cache controller and multiple CPUs, and B-L475E-IOT01A board support for a DM163 display

- LoongArch: support for directly booting an ELF kernel and for running up to 256 vCPUs via extioi virt extension

- LoongArch: enhanced debug/GDB support

- RISC-V: support for version 1.13 of privileged architecture specification

- RISC-V: support for Zve32x, Zve64x, Zimop, Zcmop, Zama16b, Zabha, Zawrs, and Smcntrpmf extensions

- RISC-V: enhanced debug/GDB support and general fixes

- SPARC: emulation support for FMAF, IMA, VIS3, and VIS4 architecture features

- x86: KVM support for running AMD SEV-SNP guests

- x86: CPU emulation support for Icelake-Server-v7, SapphireRapids-v3, and SierraForest

- and lots more…

Thank you to everybody who contributed to this release, whether that was by writing code, reporting bugs, improving documentation, testing, or providing the project with CI resources. We couldn’t do these without you!

August 09, 2024

KVM on Z

New Feature: Installation Assistant for Linux on IBM Z

Ever struggled to create configuration files for starting Linux on IBM Z and LinuxONE installations? Fear no more, we got you covered now: A new assistant available online will help you create parameter files!

Writing parameter files can be a challenge, with bugs triggering cycles with lengthy turnaround times. Our new installation assistant generates installer parameter files by walking you through a step-by-step process, where you answer simple questions to generate a parameter file. Comes with contextual help in every stage, so you can follow along what is happening!

Currently supports OSA and PCI networking devices, IPv4/v6, and VLAN installations.

Currently supports RHEL 9 and SLES 15 SP5 or later.

Access the assistant at https://ibm.github.io/liz/

July 08, 2024

Gerd Hoffmann

modern uefi network booting

Network boot kickoff.

Step number one for the firmware on any system is sending out a DHCP request, asking the DHCP server for an IP address, the boot server (called "next server" in dhcp terms) and the bootfile.

On success the firmware will contact the boot server, fetch the bootfile and hand over control to the bootfile. The traditional method to serve the bootfile is using tftp (trivial file transfer protocol). Modern systems support http too. I have an article on setting up the dhcp server for virtual machines you might want to check out.

What the bootfile is expected to be depends on the system being booted. There are embedded systems -- for example IP phones -- which load the complete system software that way.

When booting UEFI systems the bootfile typically is an EFI binary. That is not the only option though, more on that below.

UEFI network boot with a boot loader.

The traditional way to netboot linux on UEFI systems is using a bootloader. The bootfile location handed out by the DHCP server points to the bootloader and is the first file loaded over the network. Typical choices for the bootloader are grub.efi, snponly.efi (from ipxe project) or syslinux.efi.

Next step is the bootloader fetching the config file. That works the same way the bootloader itself was loaded, using the EFI network driver provided by either the platform firmware (typically the case for onboard NICs) or via PCI option rom (plug-in NICs). The bootloader does not need its own network drivers.

The loaded config file controls how the boot will continue. This can be very simple, three lines asking the bootloader to fetch kernel + initrd from a fixed location, then start the kernel with some command line. This can also be very complex, creating an interactive menu system where the user has dozens of options to choose from (see for example netboot.xyz).

Now the user can -- in case the config file defines menus -- pick what they want to boot.

Final step is the bootloader fetching the kernel and initrd (again using the EFI network driver) and starting the kernel. Voila.

Boot loaders and secure boot.

When using secure boot there is one more intermediate step needed: The first binary needs to be shim.efi, which in turn will download the actual bootloader. Most distros ship only grub.efi with a secure boot signature, which limits the boot loader choice to that.

Also all components (shim + grub + kernel) must come from the same distribution. shim.efi has the distro secure boot signing certificate embedded, so Fedora shim will only boot grub + kernel with a secure boot signature from Fedora.

Netbooting machines without EFI network driver.

You probably do not have to worry about this. Shipping systems with EFI network driver and UEFI network boot support is a standard feature today; snponly.efi should be used for these systems.

When using older hardware network boot support might be missing though. Should that be the case the ipxe project can help because it also features a large collection of firmware network drivers. It ships an all-in-one EFI binary named ipxe.efi which includes the bootloader and scripting features (which are in snponly.efi too) and additionally all the ipxe hardware drivers.

That way ipxe.efi can boot from the network even if the firmware does not provide a driver. In that case ipxe.efi itself must be loaded from local storage though. You can download the efi binary and ready-to-use ISO/USB images from boot.ipxe.org.

UEFI network boot with an UKI.

A UKI (unified kernel image) is an EFI binary bundle. It contains a linux kernel, an initrd, the command line and a few more optional components (not covered here) in sections of the EFI binary. It also contains the systemd efi stub, which handles booting the bundled linux kernel with the bundled initrd.

One advantage is that the secure boot signature of an UKI image will cover all components and not only the kernel itself, which is a big step forward for linux boot security.

Another advantage is that a UKI is self-contained. It does not need a bootloader which knows how to boot linux kernels and handle initrd loading. It is simply an EFI binary which you can start any way you want, for example from the EFI shell prompt.

The latter makes UKIs interesting for network booting, because they can be used as bootfile too. The DHCP server hands out the UKI location, the UEFI firmware fetches the UKI and starts it. Done.

Combining the bootloader and UKI approaches is possible too. UEFI bootloaders can load not only linux kernels. EFI binaries (including UKIs) can be loaded too, in case of grub.efi with the chainloader command. So if you want interactive menus to choose a UKI to boot you can do that.

UEFI network boot with an ISO image.

Modern UEFI implementations can netboot ISO images too. Unfortunately there are a few restrictions though:

- It is a relatively new feature. It exists for a few years already in edk2, but with the glacial speeds firmware feature updates are delivered (if at all) this means there is hardware in the wild which does not support this.

- It is only supported for HTTP boot. Which makes sense given that ISO images can be bulky and the http protocol typically is much faster than the tftp protocol used by PXE boot. Nevertheless you might need additional setup steps because of this.

When the UEFI firmware gets an ISO image as bootfile from the DHCP server it will load the image into a ramdisk, register the ramdisk as block device and try to boot from it.

From that point on booting works the same way booting from a local cdrom device works. The firmware will look for the boot loader on the ramdisk and load it. The bootloader will find the other components needed on the ramdisk, i.e. kernel and initrd in case of linux. All without any network access.

The UEFI firmware will also create ACPI tables for a pseudo nvdimm device. That way the booted linux kernel will find the ramdisk too. You can use the standard Fedora / CentOS / RHEL netinst ISO image, linux will find the images/install.img on the ramdisk and boot up all the way to anaconda. With enough RAM you can even load the DVD with all packages, then do the complete system install from ramdisk.

The big advantage of this approach is that the netboot workflow becomes very similar to other installation workflows. It's not the odd kid on the block any more where loading kernel and initrd works in a completely different way. The very same ISO image can be:

- Burned to a physical cdrom and used to boot a physical machine.

- In many cases the ISO images are hybrid, so they can be flashed to a USB stick too for booting a physical machine.

- The ISO image can be attached as virtual device to a virtual machine.

- On server grade managed hardware the ISO image can be attached as virtual media using redfish and the BMC.

- And finally: The ISO image can be loaded into a ramdisk via UEFI http boot.

Bonus: secure boot support suddenly isn't a headache any more.

The kernel command line.

There is one problem with the fancy new world though. We have lots of places in the linux world which depend on the linux kernel command line for system configuration. For example anaconda expects getting the URL of the install repository and the kickstart file that way.

When using a boot loader that is simple. The kernel command line simply goes into the boot loader config file.

With ISO images it is more complicated: changing the grub config file on an ISO image is a cumbersome process. Also ISO images are not exactly small, so install images with customized grub.cfg need quite some storage space.

UKIs can pass through command line arguments to the linux kernel, but that is only allowed in case secure boot is disabled. When using UKIs with secure boot the best option is to use the UKIs built and signed on distro build infrastructure. Which implies using the kernel command line for customization is not going to work with secure boot enabled.

So, all of the above (and UKIs in general) will work better if we can replace the kernel command line as universal configuration vehicle with something else. Which most likely will not be a single thing but a number of different approaches depending on the use case. Some steps into that direction did happen already. Systemd can autodetect partitions (so booting from disk without root=... on the kernel command line works). And systemd credentials can be used to configure some aspects of a linux system. There is still a loooong way to go though.

July 02, 2024

KVM on Z

New Video: Configuring Crypto Express Adapters for KVM Guests

A new video illustrating the steps to perform on a KVM host and in a virtual server configuration to make AP queues of cryptographic adapters available to a KVM guest can be found here.

June 18, 2024

KVM on Z

virtio-blk scalability improvements

QEMU 9.0 and libvirt 10.0 introduced an important improvement for disk (virtio-blk) scalability. Until now, a virtio-blk device could use one iothread in the host (or the QEMU main thread).

For a while it has already been possible to specify multiple virtio queues for a single virtio-blk device, and the Linux guest driver was able to exploit these concurrently:

<driver name='qemu' queues='3'>

However, all queues have been handled by one iothread. This introduced a limit for the maximum number of I/O requests per second per disk, no matter how many queues had been defined.

Now it is possible to assign a queue to a given host iothread allowing for higher throughput per device:

<driver name='qemu' queues='3'>
  <iothreads>
    <iothread id='2'>
      <queue id='1'/>
    </iothread>
    <iothread id='3'>
      <queue id='0'/>
      <queue id='2'/>
    </iothread>
  </iothreads>
</driver>

Example with 3 queues to illustrate the possibility to have 2 queues on one iothread and one queue on another. In real life 2 or 4 queues make more sense.
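The queue-to-iothread mapping can also be generated instead of written by hand. The following is a minimal sketch using only Python's standard library; `driver_element` is a hypothetical helper, and the check that every queue is assigned exactly once is a sanity check assumed for this sketch, not a rule quoted from the libvirt documentation.

```python
import xml.etree.ElementTree as ET


def driver_element(mapping: dict[int, list[int]]) -> ET.Element:
    """Build a <driver> element from {iothread id: [queue ids]}."""
    queues = sorted(q for qs in mapping.values() for q in qs)
    # Assumed sanity check: queues are 0..N-1 and each appears exactly once.
    if queues != list(range(len(queues))):
        raise ValueError("queues must be 0..N-1, each assigned exactly once")
    driver = ET.Element("driver", name="qemu", queues=str(len(queues)))
    iothreads = ET.SubElement(driver, "iothreads")
    for thread_id, thread_queues in mapping.items():
        iothread = ET.SubElement(iothreads, "iothread", id=str(thread_id))
        for queue_id in thread_queues:
            ET.SubElement(iothread, "queue", id=str(queue_id))
    return driver


# Same layout as the example: queue 1 on iothread 2, queues 0 and 2 on iothread 3.
driver = driver_element({2: [1], 3: [0, 2]})
print(ET.tostring(driver, encoding="unicode"))
```

The resulting element still has to be placed inside the disk definition of the domain XML, and the referenced iothreads have to exist in the domain.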

Initial tests showed improved performance and reduced CPU cycles when going from 1 to 2 queues. More performance analysis needs to happen but this looks like a very promising improvement and going from 1 to 2 is almost a no-brainer. Adding more queues continues to improve the performance, but also increases the overall CPU consumption so this needs additional considerations.

Sharing iothreads across multiple disks continues to be possible.

This feature is also being backported into some distributions like RHEL 9.4 or will be available via regular QEMU/libvirt upgrades.

May 23, 2024

KVM on Z

New Release: Ubuntu 24.04

Canonical released a new version of their Ubuntu server offering Ubuntu Server 24.04!

Highlights include

- HSM support for Secure Execution

- Further Crypto enhancements and extensions

See the announcement on the mailing list here, and the blog entry at Canonical with all Z-specific highlights here.

This release is very significant, since it marks a so-called LTS (Long Term Support) release, granting an extended service timeframe of up to 10 years, as illustrated here.

May 06, 2024

QEMU project

KVM Forum 2024: Call for presentations

The KVM Forum 2024 conference will take place in Brno, Czech Republic on September 22-23, 2024. KVM Forum brings together the Linux virtualization community, especially around the KVM stack, including QEMU and other virtual machine monitors.

The Call for Presentations is open until June 8, 2024. You are invited to submit presentation proposals via the KVM Forum CfP page. All presentation slots will be 25 minutes + 5 minutes for questions.

Suggested topics include:

- Scalability and Optimization

- Hardening and security

- Confidential computing

- Testing

- KVM and the Linux Kernel

- New Features and Architecture Ports

- Device Passthrough: VFIO, mdev, vDPA

- Network Virtualization

- Virtio and vhost

- Virtual Machine Monitors and Management

- VMM Implementation: APIs, Live Migration, Performance Tuning, etc.

- Multi-process VMMs: vhost-user, vfio-user, QEMU Storage Daemon, SPDK

- QEMU without KVM: Hypervisor.framework, Windows Hypervisor Platform, etc.

- Managing KVM: Libvirt, KubeVirt, Kata Containers

- Emulation

- New Devices, Boards and Architectures

- CPU Emulation and Binary Translation

April 29, 2024

KVM on Z

IBM Secure Execution for Linux support for Crypto Express adapters

IBM Secure Execution for Linux -- the Linux Kernel Virtual Machine (KVM) based Confidential Computing technology for IBM LinuxONE and Linux on IBM Z -- now allows Secure Execution guests to leverage secure passthrough access to up to 12 Crypto Express 8S adapter domains in accelerator or EP11 co-processor mode.

Customers who require the highest level of protection (FIPS 140-2 level 4 certified) for their cryptographic keys and thus for their sensitive data can now have their workloads deployed as Secure Execution KVM guests with access to Hardware Security Modules (HSMs) if the provider uses IBM z16 or LinuxONE 4 servers with Crypto Express 8S adapters. This combination provides business value for solutions around key and certificate management, multi-party computation and digital assets. But more use cases arise as confidential computing becomes more common and the need to leverage such highly certified HSM to protect AI models or provide data sovereignty across organizational and infrastructure boundaries grows.

To exploit this new function, IBM z16 or LinuxONE 4 servers with firmware bundles S30 and S31b are needed. To use a Crypto Express 8S adapter in EP11 mode, the minimal EP11 firmware version loaded must be version 5.8.30.

IBM is working with Linux distribution partners to include the required Linux support for this function for both the KVM Hypervisor and the Secure Execution guests in future distribution releases. Linux support for this function is already available today with Ubuntu 24.04 (Noble Numbat).

This new capability showcases IBM’s commitment and previously stated direction to foster the use of confidential computing and expand the security value proposition of existing security and crypto solutions as the business needs of our customers and technical possibilities evolve.

For detailed information on how to use Crypto Express support see the Introducing IBM Secure Execution for Linux publication.

Authored by

- Reinhard Bündgen – buendgen@de.ibm.com

- Nicolas Mäding – nmaeding@de.ibm.com

April 23, 2024

QEMU project

QEMU version 9.0.0 released

We’d like to announce the availability of the QEMU 9.0.0 release. This release contains 2700+ commits from 220 authors.

You can grab the tarball from our download page. The full list of changes is available in the changelog.

Highlights include:

- block: virtio-blk now supports multiqueue where different queues of a single disk can be processed by different I/O threads

- gdbstub: various improvements such as catching syscalls in user-mode, support for fork-follow modes, and support for siginfo:read

- memory: preallocation of memory backends can now be handled concurrently using multiple threads in some cases

- migration: support for “mapped-ram” capability allowing for more efficient VM snapshots, improved support for zero-page detection, and checkpoint-restart support for VFIO

- ARM: architectural feature support for ECV (Enhanced Counter Virtualization), NV (Nested Virtualization), and NV2 (Enhanced Nested Virtualization)

- ARM: board support for B-L475E-IOT01A IoT node, mps3-an536 (MPS3 dev board + AN536 firmware), and raspi4b (Raspberry Pi 4 Model B)

- ARM: additional IO/disk/USB/SPI/ethernet controller and timer support for Freescale i.MX6, Allwinner R40, Banana Pi, npcm7xxx, and virt boards

- HPPA: numerous bug fixes and SeaBIOS-hppa firmware updated to version 16

- LoongArch: KVM acceleration support, including LSX/LASX vector extensions

- RISC-V: ISA/extension support for Zacas, amocas, RVA22 profiles, Zaamo, Zalrsc, Ztso, and more

- RISC-V: SMBIOS support for RISC-V virt machine, ACPI support for SRAT, SLIT, AIA, PLIC and updated RHCT table support, and numerous fixes

- s390x: Emulation support for CVDG, CVB, CVBY and CVBG instructions, and fixes for LAE (Load Address Extended) emulation

- and lots more…

Thank you to everybody who contributed to this release, whether that was by writing code, reporting bugs, improving documentation, testing, or providing the project with CI resources. We couldn’t do these without you!

April 16, 2024

Marcin Juszkiewicz

ConfigurationManager in EDK2: just say no

During my work on SBSA Reference Platform I have spent a lot of time in firmware code. Which mostly meant Tianocore EDK2, as Trusted Firmware is quite small.

Writing all those ACPI tables by hand takes time. So I checked ConfigurationManager component which can do it for me.

Introduction

In 2018 Sami Mujawar from Arm contributed Dynamic Tables Framework to Tianocore EDK2 project. The goal was to have code which generates all ACPI tables from all those data structs describing hardware which EDK2 already has.

In 2023 I was writing code for IORT and GTDT tables to generate them from C. And started wondering about use of ConfigurationManager.

Mailed edk2-devel ML for pointers, documentation, hints. Got nothing in return, idea went to the shelf.

SBSA-Ref and multiple PCI Express buses

Last week I got SBSA-Ref system booting in NUMA configuration with three separate PCI Express buses. And started working on getting EDK2 firmware to recognize them as such.

Took me a day and pci command listed cards properly:

Shell> pci

Seg Bus Dev Func

--- --- --- ----

00 00 00 00 ==> Bridge Device - Host/PCI bridge

Vendor 1B36 Device 0008 Prog Interface 0

00 00 01 00 ==> Network Controller - Ethernet controller

Vendor 8086 Device 10D3 Prog Interface 0

00 00 02 00 ==> Bridge Device - PCI/PCI bridge

Vendor 1B36 Device 000C Prog Interface 0

00 00 03 00 ==> Bridge Device - Host/PCI bridge

Vendor 1B36 Device 000B Prog Interface 0

00 00 04 00 ==> Bridge Device - Host/PCI bridge

Vendor 1B36 Device 000B Prog Interface 0

00 01 00 00 ==> Mass Storage Controller - Non-volatile memory subsystem

Vendor 1B36 Device 0010 Prog Interface 2

00 40 00 00 ==> Bridge Device - PCI/PCI bridge

Vendor 1B36 Device 000C Prog Interface 0

00 40 01 00 ==> Bridge Device - PCI/PCI bridge

Vendor 1B36 Device 000C Prog Interface 0

00 41 00 00 ==> Base System Peripherals - SD Host controller

Vendor 1B36 Device 0007 Prog Interface 1

00 42 00 00 ==> Display Controller - Other display controller

Vendor 1234 Device 1111 Prog Interface 0

00 80 00 00 ==> Bridge Device - PCI/PCI bridge

Vendor 1B36 Device 000E Prog Interface 0

00 80 01 00 ==> Bridge Device - PCI/PCI bridge

Vendor 1B36 Device 000C Prog Interface 0

00 81 09 00 ==> Multimedia Device - Audio device

Vendor 1274 Device 5000 Prog Interface 0

00 81 10 00 ==> Network Controller - Ethernet controller

Vendor 8086 Device 100E Prog Interface 0

00 82 00 00 ==> Mass Storage Controller - Serial ATA controller

Vendor 8086 Device 2922 Prog Interface 1

Three buses are: 0x00, 0x40 and 0x80. But then I had to tell Operating System about those. Which meant playing with ACPI tables code in C.

So idea came “what about trying ConfigurationManager?”.

Another try

Mailed edk2-devel ML again for pointers, documentation hints. And then looked at code written for N1SDP and started playing with ConfigurationManager…

ConfigurationManager.c has EDKII_PLATFORM_REPOSITORY_INFO struct with hundreds of lines of data (as further nested structs). From listing which ACPI tables I want to have (FADT, GTDT, APIC, SPCR, DBG2, IORT, MCFG, SRAT, DSDT, PPTT etc.) to listing all hardware details like GIC, PCIe, Timers, CPU and Memory information.

Then code for querying this struct. I thought that CM/DT (ConfigurationManager/DynamicTables) framework will have those already in EDK2 code but no — each platform has own set of functions. Another hundreds of lines to maintain.

Took some time to get it built, then started filling proper data and compared with ACPI tables I had previously. There were differences to sort out. But digging more and more into code I saw that I go deeper and deeper into rabbit hole…

Dynamic systems do not fit CM?

For platforms with dynamic hardware configuration (like SBSA-Ref) I needed to write code which would populate that struct with data at runtime. Check the number of CPU cores and write CPU information (with topology, cache etc.), create all GIC structures and mappings. Then the same for PCIe buses. Etc. Etc. Etc…

STATIC
EFI_STATUS
EFIAPI
InitializePlatformRepository (
  IN  EDKII_PLATFORM_REPOSITORY_INFO  * CONST  PlatRepoInfo
  )
{
  GicInfo                 GicInfo;
  CM_ARM_GIC_REDIST_INFO  *GicRedistInfo;
  CM_ARM_GIC_ITS_INFO     *GicItsInfo;
  CM_ARM_SMMUV3_NODE      *SmmuV3Info;

  GetGicDetails(&GicInfo);

  PlatRepoInfo->GicDInfo.PhysicalBaseAddress = GicInfo.DistributorBase;

  GicRedistInfo = &PlatRepoInfo->GicRedistInfo[0];
  GicRedistInfo->DiscoveryRangeBaseAddress = GicInfo.RedistributorsBase;

  GicItsInfo = &PlatRepoInfo->GicItsInfo[0];
  GicItsInfo->PhysicalBaseAddress = GicInfo.ItsBase;

  SmmuV3Info = &PlatRepoInfo->SmmuV3Info[0];
  SmmuV3Info->BaseAddress = PcdGet64 (PcdSmmuBase);

  return EFI_SUCCESS;
}

Which in my case can mean even more code written to populate CM struct of structs than it would take to generate ACPI tables by hand.

Summary

ConfigurationManager and DynamicTables frameworks look tempting. There may be systems where it can be used with success. I know that I do not want to touch it again. All those structs of structs may look good for someone familiar with LISP or JSON but not for me.

April 04, 2024

Marcin Juszkiewicz

DT-free EDK2 on SBSA Reference Platform

During the last few weeks we worked on getting rid of DeviceTree from EDK2 on SBSA Reference Platform. And finally we managed!

All code is merged into upstream EDK2 repository.

What?

Someone may wonder where DeviceTree was in SBSA Reference Platform. Wasn’t it UEFI and ACPI platform?

Yes, from Operating System point of view it is UEFI and ACPI. But if you look deeper you will see DeviceTree hidden inside our chain of software components:

/dts-v1/;

/ {
        machine-version-minor = <0x03>;
        machine-version-major = <0x00>;
        #size-cells = <0x02>;
        #address-cells = <0x02>;
        compatible = "linux,sbsa-ref";

        chosen {
        };

        memory@10000000000 {
                reg = <0x100 0x00 0x00 0x40000000>;
                device_type = "memory";
        };

        intc {
                reg = <0x00 0x40060000 0x00 0x10000
                       0x00 0x40080000 0x00 0x4000000>;

                its {
                        reg = <0x00 0x44081000 0x00 0x20000>;
                };
        };

        cpus {
                #size-cells = <0x00>;
                #address-cells = <0x02>;

                cpu@0 {
                        reg = <0x00 0x00>;
                };

                cpu@1 {
                        reg = <0x00 0x01>;
                };

                cpu@2 {
                        reg = <0x00 0x02>;
                };

                cpu@3 {
                        reg = <0x00 0x03>;
                };
        };
};

It is a very minimal one, providing us with only the information we need. It does not even pass any compliance checks. For example, for Linux the GIC node (the /intc/ one) should have a gazillion fields, but we only need addresses.
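To read the addresses out of such a tree, the `reg` cells have to be grouped according to `#address-cells` and `#size-cells` (both 2 at the root here, so each address and each size is built from two 32-bit cells). A minimal sketch of that arithmetic, with `decode_reg` as a hypothetical helper rather than code from TF-A or EDK2:

```python
def decode_reg(cells, address_cells, size_cells):
    """Group 32-bit cells into (address, size) pairs, most significant cell first."""
    def join(words):
        value = 0
        for word in words:
            value = (value << 32) | word
        return value

    stride = address_cells + size_cells
    if len(cells) % stride:
        raise ValueError("reg length is not a multiple of address+size cells")
    pairs = []
    for i in range(0, len(cells), stride):
        address = join(cells[i:i + address_cells])
        size = join(cells[i + address_cells:i + stride])
        pairs.append((address, size))
    return pairs


# Values copied from the memory and intc nodes of the tree.
memory = decode_reg([0x100, 0x00, 0x00, 0x40000000], 2, 2)
intc = decode_reg([0x00, 0x40060000, 0x00, 0x10000,
                   0x00, 0x40080000, 0x00, 0x4000000], 2, 2)
for address, size in memory + intc:
    print(f"base={address:#x} size={size:#x}")
```

The memory node decodes to base 0x10000000000, which matches its unit address (memory@10000000000), with a size of 0x40000000 bytes (1 GiB).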

Trusted Firmware reads it, parses and provides information from it via Secure Monitor Calls (SMC) to upper firmware level (EDK2 in our case). DeviceTree is provided too but we do not read it any more.

Why?

Our goal is to treat software components a bit differently than people may expect. QEMU is “virtual hardware” layer, TF-A provides interface to “embedded controller” (EC) layer and EDK2 is firmware layer on top.

On physical hardware, firmware assumes some parts and asks the EC for the rest of the system information. QEMU does not give us that, while letting us alter the system configuration, with a bunch of CLI arguments, more than would be possible on most hardware platforms.

EDK2 asks for CPU, GIC and Memory. When there is no info about processors or memory, it informs the user and shuts down the system (such a situation does not have a chance of happening but it works as an example).

Bonus stuff: NUMA

Bonus part of this work was adding firmware support for NUMA configuration. When QEMU is run with NUMA arguments then operating system gets whole memory and proper configuration information.

QEMU arguments used:

-smp 4,sockets=4,maxcpus=4

-m 4G,slots=2,maxmem=5G

-object memory-backend-ram,size=1G,id=m0

-object memory-backend-ram,size=3G,id=m1

-numa node,nodeid=0,cpus=0-1,memdev=m0

-numa node,nodeid=1,cpus=2,memdev=m1

-numa node,nodeid=2,cpus=3

How Operating System sees NUMA information:

root@sbsa-ref:~# numactl --hardware

available: 3 nodes (0-2)

node 0 cpus: 0 1

node 0 size: 975 MB

node 0 free: 840 MB

node 1 cpus: 2

node 1 size: 2950 MB

node 1 free: 2909 MB

node 2 cpus: 3

node 2 size: 0 MB

node 2 free: 0 MB

node distances:

node 0 1 2

0: 10 20 20

1: 20 10 20

2: 20 20 10

What next?

There is CPU topology information in review queue. All those sockets, clusters, cores and threads. QEMU will pass it in DeviceTree, TF-A will give it via SMC and then EDK2 will put it in one of ACPI tables (PPTT == Processor Properties Topology Table).

If someone decides to write their own firmware for SBSA Reference Platform (like a port of U-Boot) then both DeviceTree and the set of SMC calls will wait for them, ready to be used to gather hardware information.

March 31, 2024

Stefan Hajnoczi

Where are the Supply Chain Safe Programming Languages?

Programming languages currently offer few defences against supply chain attacks where a malicious third-party library compromises a program. As I write this, the open source community is trying to figure out the details of the xz-utils backdoor, but there is a long history of supply chain attacks. High profile incidents have made plain the danger of shipping software built from large numbers of dependencies, many of them unaudited and under little scrutiny for malicious code. In this post I will share ideas on future supply chain safe programming languages.

Supply Chain Safe Programming Languages?

I'm using the term Supply Chain Safe Programming Languages for languages that defend against supply chain attacks and allow library dependencies to be introduced with strong guarantees about what the dependencies can and cannot do. This type of programming language is not yet widely available as of March 2024, to the best of my knowledge.

Supply chain safety is often associated with software packaging and distribution techniques for verifying that software was built from known good inputs. Although adding supply chain safety tools on top of existing programming languages is a pragmatic solution, I think future progress requires addressing supply chain safety directly in the programming language.

Why today's languages are not supply chain safe

Many existing languages have a module system that gives the programmer control over the visibility of variables and functions. By hiding variables and functions from other modules, one might hope to achieve isolation so that a component like a decompression library could not read a sensitive variable from the program. Unfortunately this level of isolation between components is not really available in popular programming languages today even in languages with public/private visibility features. Visibility is more of a software engineering tool for keeping programs decoupled than an isolation mechanism that actually protects components of a program from each other. There are many ways to bypass visibility.

The fundamental problem is that existing programming languages do not even acknowledge that programs often consist of untrusted components. Compilers and interpreters currently treat the entire input source code as having more or less the same level of trust. Here are some of the ways in which today's programming languages fall short:

- Unsafe programming languages like C, C++, and even Rust allow the programmer to bypass the type system to do pretty much anything.

- Dynamic languages like Python and JavaScript have introspection and monkey patching abilities that allow the programmer to hook other parts of the program and escape attempts at isolation.

- Build systems and metaprogramming facilities like macros allow untrusted components to generate code that executes in the context of another component.

- Standard libraries provide access to spawning new programs, remapping virtual memory, loading shared libraries written in unsafe languages, hijacking function calls through the linker, raw access to the program's memory space with /proc/self/mem, and so on. All of these can bypass language-level mechanisms for isolating components in a program.
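As a small illustration of the dynamic language point, here is a sketch in Python. `Vault` stands in for application code and `untrusted_helper` for a third-party function; both names are made up for this example. The "private" attribute is readable through introspection, and the helper can replace a method of a class it does not own.

```python
class Vault:
    """Stands in for application code that holds a secret."""

    def __init__(self):
        self.__token = "s3cret"  # name-mangled "private" attribute

    def check(self, guess):
        return guess == self.__token


def untrusted_helper(obj):
    """Stands in for third-party library code handed some object."""
    # Introspection: name mangling only renames the attribute.
    stolen = obj._Vault__token
    # Monkey patching: replace the method for every instance of the class.
    type(obj).check = lambda self, guess: True
    return stolen


vault = Vault()
leaked = untrusted_helper(vault)
print(leaked, vault.check("wrong"))
```

Nothing in the language stops the helper from doing this, which is the sense in which visibility is not an isolation mechanism.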

Whatever your current language, it's unlikely that the language itself allows you to isolate components of a program. The best approach we have today for run-time isolation is through sandboxing. Examples of sandboxing approaches include seccomp(2), v8 Isolates for JavaScript, invoking untrusted code in a WebAssembly runtime, or the descendants of chroot(2).

Sandboxes are not supported directly by the programming language and have a number of drawbacks and limitations. Integrating sandboxing into programs is tedious so they are primarily used in the most critical attack surfaces like web browsers or hypervisors. There is usually a performance overhead associated with interacting with the sandbox because data needs to be marshalled or copied. Sandboxing is an opt-in mechanism that doesn't raise the bar of software in general. I believe that supply chain safe programming languages could offer similar isolation but as the default for most software.

What a Supply Chain Safe Programming Language looks like

The goal of a supply chain safe programming language is to isolate components of a program by default. Rather than leaving supply chain safety outside the scope of the language, the language should allow components to be integrated with strong guarantees about what effects they can have on each other. There may be practical reasons to offer an escape hatch to unsafe behavior, but the default needs to be safe.

At what level of granularity should isolation operate? I think modules are too coarse grained because they are often collections of functions that perform very different types of computation requiring different levels of access to resources. The level of granularity should at least go down to the function level within a component, although even achieving module-level granularity would be a major improvement over today's standards.

An example is that a hash table lookup function should be unable to connect to the internet. That way the function can be used without fear of it becoming a liability if it contains bugs or its source code is manipulated by an attacker.

A well-known problem in programming language security is that the majority of languages expose ambient capabilities to all components in a program. Ambient capabilities provide access to resources that are not explicitly passed in to the component. Think of a file descriptor in a POSIX process that is available to any function in the program, including a string compare function that has no business manipulating file descriptors.

Capability-based security approaches are a solution to the ambient capabilities problem in languages today. Although mainstream programming languages do not offer capabilities as part of the language, there have been special-purpose and research languages that demonstrated that this approach works. In a type safe programming language with capability-based security it becomes possible to give components access to only those resources that they require. Usually type safety is the mechanism that prevents capabilities from being created out of thin air, although other approaches may be possible for dynamic languages. The type system will not allow a component to create for itself a new capability that the component does not already possess.
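The style of programming this leads to can be sketched in Python, with a big caveat: Python keeps ambient authority (any function can still call `open()` or import `socket`), so the sketch only shows the shape of capability passing, not its enforcement. `AppendOnlyLog`, `left_pad` and `audit` are hypothetical names for this example.

```python
import io


class AppendOnlyLog:
    """A narrow capability: holders can append lines, nothing else."""

    def __init__(self, stream):
        self._stream = stream

    def append(self, line: str) -> None:
        self._stream.write(line + "\n")


def left_pad(text: str, width: int) -> str:
    """Needs no resources, so it is handed no capabilities."""
    return text.rjust(width)


def audit(event: str, log: AppendOnlyLog) -> None:
    """Can only affect the log it was explicitly given."""
    log.append(left_pad(event, 12))


buffer = io.StringIO()
audit("login", AppendOnlyLog(buffer))
print(repr(buffer.getvalue()))
```

In a language that enforced this, `left_pad` would have no way to reach a file or the network because nothing of the sort was passed in, and it could not forge an `AppendOnlyLog` on its own.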

Capability-based security addresses safety at runtime, but it does not address safety at compile time. If we want to compose programs from untrusted components then it is not possible to rely on today's build scripts, code generators, or macro systems. The problem is that they can be abused by a component to execute code in the context of another component.

Compile-time supply chain safety means isolating components so their code stays within their component. For example, a "leftpad" macro that pads a string literal with leading spaces would be unsafe if it can generate code that is compiled as part of the main program using the macro. Similarly, a build script for the leftpad module must not be able to affect or escape the build environment.

Macros, build scripts, code generators, and so on are powerful tools that programmers find valuable. The challenge for supply chain safe programming languages is to harness that power so that it remains convenient to use without endangering safety. One example solution is running build scripts in an isolated environment that cannot affect other components in the program. This way a component can take advantage of custom build-time behavior without endangering the program. However, it is unclear to me how far inter-component facilities like macros can be made safe, if at all.

Conclusion

I don't have the answers or even a prototype, but I think supply chain safe programming languages are an inevitability. Modern programs are composed of many third-party components yet we do not have effective techniques for confining components. Languages treat the entire program as trusted rather than as separate untrusted components that must be isolated.

Hopefully we will begin to see new mainstream programming languages emerge that are supply chain safe, not just memory safe!

March 25, 2024

Marcin Juszkiewicz

Running SBSA Reference Platform

Recently people asked me how to run SBSA Reference Platform for their own testing and development. Which shows that I should write some documentation.

But first let me blog about it…

Requirements

To run SBSA Reference Platform emulation you need:

- QEMU (8.2+ recommended)

- EDK2 firmware files

That’s all. Sure, some hardware resources would be handy but everyone has some kind of computer available, right?

QEMU

Nothing special is required as long as you have qemu-system-aarch64 binary available.

EDK2

We provide EDK2 binaries on CodeLinaro server. Go to “latest/edk2” directory, fetch both “SBSA_FLASH*” files, unpack them and you are ready to go. You may compare checksums (before unpacking) with values present in “latest/README.txt” file.

Those binaries are built from release versions of Trusted Firmware (TF-A) and Tianocore EDK2 plus latest “edk2-platforms” code (as this repo is not using tags).

Building EDK2

If you decide to build EDK2 on your own then we provide TF-A binaries in “edk2-non-osi” repository. I update those when it is needed.

Instructions to build EDK2 are provided in Qemu/SbsaQemu directory of “edk2-platforms” repository.

Running SBSA Reference Platform emulation

Note that this machine is fully emulated. Even on AArch64 systems where virtualization is available.

Let's go through an example QEMU command line:

qemu-system-aarch64

-machine sbsa-ref

-drive file=firmware/SBSA_FLASH0.fd,format=raw,if=pflash

-drive file=firmware/SBSA_FLASH1.fd,format=raw,if=pflash

-serial stdio

-device usb-kbd

-device usb-tablet

-cdrom disks/alpine-standard-3.19.1-aarch64.iso

At first we select “sbsa-ref” machine (defaults to four Neoverse-N1 cpu cores and 1GB of ram). Then we point to firmware files (order of them is important).

Serial console is useful for diagnostic output, just remember to not press Ctrl-C there unless you want to take whole emulation down.

USB devices are to have working keyboard and pointing device. USB tablet is more useful than USB mouse (-device usb-mouse adds it). If you want to run *BSD operating systems then I recommend to add USB mouse.

And the last entry adds Alpine 3.19.1 ISO image.
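When the same command gets started from scripts, it can help to assemble it as an argument list. A minimal sketch, with `sbsa_ref_argv` as a hypothetical helper; it only builds the list from the arguments shown above and does not run anything:

```python
import shlex


def sbsa_ref_argv(flash0, flash1, iso):
    """Return the example command as an argv list (nothing is executed)."""
    return [
        "qemu-system-aarch64",
        "-machine", "sbsa-ref",
        # Order of the firmware files matters: FLASH0 first, then FLASH1.
        "-drive", f"file={flash0},format=raw,if=pflash",
        "-drive", f"file={flash1},format=raw,if=pflash",
        "-serial", "stdio",
        "-device", "usb-kbd",
        "-device", "usb-tablet",
        "-cdrom", iso,
    ]


argv = sbsa_ref_argv("firmware/SBSA_FLASH0.fd",
                     "firmware/SBSA_FLASH1.fd",
                     "disks/alpine-standard-3.19.1-aarch64.iso")
print(shlex.join(argv))
```

The list can be handed to `subprocess.run(argv)` as is, and extra options from the following sections can be appended to it.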

System boots to text console on graphical output. For some reason boot console is on serial port.

Adding hard disk

If you want to add hard disk then adding “-hdb disk.img” is enough (“hdb” as cdrom took 1st slot on the AHCI controller).

Handy thing is “virtual FAT drive” which allows creating a guest drive from a directory on the host:

-drive if=ide,file=fat:ro:DIRECTORY_ON_HOST,format=raw

This is useful for running EFI binaries as this drive is visible in UEFI environment. It is not present when operating system is booted.

Adding NVME drive

NVME is composed of two things:

- PCIe device

- storage drive

So let's add it in a nice way, using PCIe root-port:

-device pcie-root-port,id=root_port_for_nvme1,chassis=2,slot=0

-device nvme,serial=deadbeef,bus=root_port_for_nvme1,drive=nvme

-drive file=disks/nvme.img,format=raw,id=nvme,if=none

Using NUMA configuration

QEMU can emulate Non-Uniform Memory Access (NUMA) setup. This usually means multisocket systems with memory available per cpu socket.

Example config:

-smp 4,sockets=4,maxcpus=4

-m 4G,slots=2,maxmem=5G

-object memory-backend-ram,size=1G,id=m0

-object memory-backend-ram,size=3G,id=m1

-numa node,nodeid=0,cpus=0-1,memdev=m0

-numa node,nodeid=1,cpus=2,memdev=m1

-numa node,nodeid=2,cpus=3

This adds four cpu sockets and 4GB of memory. First node has 2 cpu cores and 1GB ram, second node has 1 cpu and 3GB of ram and last node has 1 cpu without local memory.
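The per-node part of that config follows a simple pattern: one memory backend per node that has local memory, then one `-numa node` entry per node. A minimal sketch that generates it, with `numa_args` as a hypothetical helper; the `-smp` and `-m` arguments still have to be added by hand so that they match the totals:

```python
def numa_args(nodes):
    """Build -object/-numa arguments from (cpus, size) pairs.

    size is None for a node without local memory.
    """
    objects = []
    numas = []
    for node_id, (cpus, size) in enumerate(nodes):
        numa = f"node,nodeid={node_id},cpus={cpus}"
        if size is not None:
            memdev = f"m{node_id}"
            objects += ["-object", f"memory-backend-ram,size={size},id={memdev}"]
            numa += f",memdev={memdev}"
        numas += ["-numa", numa]
    # Memory backends first, then the nodes that refer to them.
    return objects + numas


# Same layout as the example config: 2 cpus + 1G, 1 cpu + 3G, 1 cpu without memory.
args = numa_args([("0-1", "1G"), ("2", "3G"), ("3", None)])
print(" ".join(args))
```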

Note that support for such a setup is a work in progress now (March 2024). We merged required code into TF-A and have a set of patches for EDK2 in review. Without them you will see resources only from the first NUMA node.

Complex PCI Express setup

Our platform has GIC ITS support so we can try some complex PCI Express structures.

This example uses PCIe switch to add more PCIe slots and then (to complicate things) puts PCIe-to-PCI bridge into one of them to make use of old Intel e1000 network card:

-device pcie-root-port,id=root_port_for_switch1,chassis=0,slot=0

-device x3130-upstream,id=up_port1,bus=root_port_for_switch1

-device xio3130-downstream,id=down_port1,bus=up_port1,chassis=1,slot=0

-device ac97,bus=down_port1

-device xio3130-downstream,id=down_port2,bus=up_port1,chassis=1,slot=1

-device pcie-pci-bridge,id=pci1,bus=down_port2

-device e1000,bus=pci1,addr=2

Some helper scripts

During last year I wrote some helper scripts for working with SBSA Reference Platform testing. They are stored in sbsa-ref-status repository on GitHub.

May lack up-to-date documentation but can show my way of using the platform.

Summary

SBSA Reference Platform can be used for testing several things. From operating systems to (S)BSA compliance of the platform. Or to check how some things are emulated in QEMU. Or playing with PCIe setups (NUMA systems can have separate PCI Express buses but we do not handle it yet in firmware).

Have fun!

KVM on Z

Important Note on Verifying Secure Execution Host Key Documents

The certificates of the host key signing keys that are needed to verify host key documents will expire on April 24, 2024 for IBM z15 and LinuxONE III and on March 29, 2024 for IBM z16 and LinuxONE 4. Due to a requirement from the Certificate Authority (DigiCert), the renewed certificates are equipped with a new Locality value (“Armonk” instead of “Poughkeepsie”). These renewed certificates cause the current versions of the genprotimg, pvattest, and pvsecret tools to fail the verification of host key documents.

The IBM Z team is preparing updates of the genprotimg, pvattest, and pvsecret tools to accept the new certificates and is working with Linux distribution partners to release the updated tools.

To build new Secure Execution images, attestation requests, or add-secret requests before the updated tools are available in Linux distributions, follow these steps:

Step 1:

Obtain the host key document, the host key signing key certificate, the intermediate certificate from the Certificate Authority, and the list of revoked host keys (CRL):

For IBM z15 and LinuxONE III, see https://www.ibm.com/support/resourcelink/api/content/public/secure-execution-gen1.html

For IBM z16 and LinuxONE 4, see https://www.ibm.com/support/resourcelink/api/content/public/secure-execution-gen2.html

Step 2:

Step 3:

Verify each host key document using the check_hostkeydoc script. For example, issue

# ./check_hostkeydoc HKD1234.crt ibm-z-host-key-signing.crt \
    -c DigiCertCA.crt -r ibm-z-host-key.crl

This example verifies the host key document HKD1234.crt using the host key signing key certificate ibm-z-host-key-signing.crt, and the intermediate certificate of the Certificate Authority DigiCertCA.crt, as well as the list of revoked host keys ibm-z-host-key.crl.

After the host key documents are verified using the check_hostkeydoc script, you can safely call genprotimg, pvattest, or pvsecret with the --no-verify option.

For a description about how to manually verify host key documents, see https://www.ibm.com/docs/en/linux-on-z?topic=execution-verify-host-key-document.

March 12, 2024

Stefan Hajnoczi

How to access libvirt domains in KubeVirt

KubeVirt makes it possible to run virtual machines on Kubernetes alongside container workloads. Virtual machines are configured using VirtualMachineInstance YAML. But under the hood of KubeVirt lies the same libvirt tooling that is commonly used to run KVM virtual machines on Linux. Accessing libvirt can be convenient for development and troubleshooting.

Note that bypassing KubeVirt must be done carefully. Doing this in production may interfere with running VMs. If a feature is missing from KubeVirt, then please request it.

The following diagram shows how the user's VirtualMachineInstance is turned into a libvirt domain:

Accessing virsh

Libvirt's virsh command-line tool is available inside the virt-launcher Pod that runs a virtual machine. First determine vm1's virt-launcher Pod name by filtering on its label (thanks to Alice Frosi for this trick!):

$ kubectl get pod -l vm.kubevirt.io/name=vm1
NAME                      READY   STATUS    RESTARTS   AGE
virt-launcher-vm1-5gxvg   2/2     Running   0          8m13s

Find the name of the libvirt domain (this is guessable but it doesn't hurt to check):

$ kubectl exec virt-launcher-vm1-5gxvg -- virsh list
 Id   Name          State
-----------------------------
 1    default_vm1   running

Arbitrary virsh commands can be invoked. Here is an example of dumping the libvirt domain XML:

$ kubectl exec virt-launcher-vm1-5gxvg -- virsh dumpxml default_vm1
<domain type='kvm' id='1'>
  <name>default_vm1</name>
...
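
If you find yourself repeating these lookups, they can be wrapped in a small script. Here is a minimal Python sketch: the helper names `parse_virsh_list` and `virsh` are hypothetical, and the parser assumes the table layout shown in the `virsh list` output above.

```python
import subprocess


def parse_virsh_list(output):
    """Parse `virsh list` output into (id, name, state) tuples."""
    domains = []
    for line in output.splitlines():
        fields = line.split(None, 2)
        # Skip the header, the dashed separator, and blank lines.
        if len(fields) == 3 and fields[0].isdigit():
            domains.append((int(fields[0]), fields[1], fields[2].strip()))
    return domains


def virsh(pod, *args):
    """Run a virsh command inside the given virt-launcher Pod."""
    cmd = ["kubectl", "exec", pod, "--", "virsh", *args]
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


# Sample output copied from the example above.
sample = """\
 Id   Name          State
-----------------------------
 1    default_vm1   running
"""
print(parse_virsh_list(sample))
```

On a real cluster, `parse_virsh_list(virsh("virt-launcher-vm1-5gxvg", "list"))` would return the same structure for the running domain.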

Viewing libvirt logs and the full QEMU command-line

The libvirt logs are captured by Kubernetes so you can view them with kubectl logs <virt-launcher-pod-name>. If you don't know the virt-launcher pod name, check with kubectl get pod and look for your virtual machine's name.

The full QEMU command-line is part of the libvirt logs, but unescaping the JSON string is inconvenient. Here is another way to get the full QEMU command-line:

$ kubectl exec <virt-launcher-pod-name> -- ps aux | grep qemu
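
If you would rather pull the command-line out of the logs, the unescaping can be left to a JSON parser. This is a minimal Python sketch under two assumptions that are not confirmed here: that each log line is a JSON object, and that the message text lives in a field named `msg`. The sample log line is hypothetical and heavily shortened.

```python
import json


def extract_messages(log_text, needle="qemu"):
    """Yield decoded `msg` fields from JSON log lines that mention `needle`."""
    for line in log_text.splitlines():
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # tolerate lines that are not JSON
        if not isinstance(record, dict):
            continue
        msg = record.get("msg", "")  # assumed field name
        if isinstance(msg, str) and needle in msg:
            yield msg


# Hypothetical log line; real lines carry more fields and a much longer command.
sample = r'{"level": "info", "msg": "/usr/bin/qemu-kvm -name \"guest=default_vm1\""}'
for message in extract_messages(sample):
    print(message)
```

Feeding it the text saved from the Pod's logs prints the matching messages with the quotes already unescaped.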

Customizing KubeVirt's libvirt domain XML

KubeVirt has a feature for customizing libvirt domain XML called hook sidecars. After the libvirt XML is generated, it is sent to a user-defined container that processes the XML and returns it back. The libvirt domain is defined using this processed XML. To learn more about how it works, check out the documentation.

Hook sidecars are available when the Sidecar feature gate is enabled in the kubevirt/kubevirt custom resource. Normally only the cluster administrator can modify the kubevirt CR, so be sure to check when trying this feature:

$ kubectl auth can-i update kubevirt/kubevirt -n kubevirt
yes

Although you can provide a complete container image for the hook sidecar, there is a shortcut if you just want to run a script. A generic hook sidecar image is available that launches a script which can be provided as a ConfigMap. Here is example YAML including a ConfigMap that I've used to test the libvirt IOThread Virtqueue Mapping feature:

---
apiVersion: kubevirt.io/v1
kind: KubeVirt
metadata:
  name: kubevirt
  namespace: kubevirt
spec:
  configuration:
    developerConfiguration:
      featureGates:
        - Sidecar

---
apiVersion: cdi.kubevirt.io/v1beta1
kind: DataVolume
metadata:
  name: "fedora"
spec:
  storage:
    accessModes:
      - ReadWriteOnce
    resources:
      requests:
        storage: 5Gi
  source:
    http:
      url: "https://download.fedoraproject.org/pub/fedora/linux/releases/38/Cloud/x86_64/images/Fedora-Cloud-Base-38-1.6.x86_64.raw.xz"

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: sidecar-script
data:
  my_script.sh: |
    #!/usr/bin/env python3
    import xml.etree.ElementTree as ET
    import os.path
    import sys

    NUM_IOTHREADS = 4
    VOLUME_NAME = 'data' # VirtualMachine volume name

    def main(xml):
        domain = ET.fromstring(xml)

        domain.find('iothreads').text = str(NUM_IOTHREADS)

        disk = domain.find(f"./devices/disk/alias[@name='ua-{VOLUME_NAME}']..")
        driver = disk.find('driver')
        del driver.attrib['iothread']
        iothreads = ET.SubElement(driver, 'iothreads')
        for i in range(NUM_IOTHREADS):
            iothread = ET.SubElement(iothreads, 'iothread')
            iothread.set('id', str(i + 1))

        ET.dump(domain)

    if __name__ == "__main__":
        # Workaround for https://github.com/kubevirt/kubevirt/issues/11276
        if os.path.exists('/tmp/ran-once'):
            main(sys.argv[4])
        else:
            open('/tmp/ran-once', 'wb')
            print(sys.argv[4])

---
apiVersion: kubevirt.io/v1
kind: VirtualMachineInstance
metadata:
  creationTimestamp: 2018-07-04T15:03:08Z
  generation: 1
  labels:
    kubevirt.io/os: linux
  name: vm1
  annotations:
    hooks.kubevirt.io/hookSidecars: '[{"args": ["--version", "v1alpha3"],
      "image": "kubevirt/sidecar-shim:20240108_99b6c4bdb",
      "configMap": {"name": "sidecar-script",
                    "key": "my_script.sh",
                    "hookPath": "/usr/bin/onDefineDomain"}}]'
spec:
  domain:
    ioThreadsPolicy: auto
    cpu:
      cores: 8
    devices:
      blockMultiQueue: true
      disks:
      - disk:
          bus: virtio
        name: disk0
      - disk:
          bus: virtio
        name: data
    machine:
      type: q35
    resources:
      requests:
        memory: 1024M
  volumes:
  - name: disk0
    persistentVolumeClaim:
      claimName: fedora
  - name: data
    emptyDisk:
      capacity: 8Gi
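
To see what the hook script does to the domain XML, its transformation can be run locally against a small input. The sketch below reuses the logic from the ConfigMap script. `SAMPLE_XML` is a hypothetical, heavily trimmed domain (real KubeVirt output is much larger), and `map_iothreads` is just a name chosen for this illustration.

```python
import xml.etree.ElementTree as ET

NUM_IOTHREADS = 4
VOLUME_NAME = 'data'  # VirtualMachine volume name

# Hypothetical minimal input: one disk that uses a single IOThread.
SAMPLE_XML = """
<domain type='kvm'>
  <iothreads>1</iothreads>
  <devices>
    <disk type='file' device='disk'>
      <driver name='qemu' type='raw' iothread='1'/>
      <alias name='ua-data'/>
    </disk>
  </devices>
</domain>
""".strip()


def map_iothreads(xml):
    """Replace the disk's single iothread attribute with a list of IOThreads."""
    domain = ET.fromstring(xml)
    domain.find('iothreads').text = str(NUM_IOTHREADS)

    # Find the <alias> for the volume, then step up to its parent <disk>.
    disk = domain.find(f"./devices/disk/alias[@name='ua-{VOLUME_NAME}']..")
    driver = disk.find('driver')
    del driver.attrib['iothread']
    iothreads = ET.SubElement(driver, 'iothreads')
    for i in range(NUM_IOTHREADS):
        ET.SubElement(iothreads, 'iothread').set('id', str(i + 1))
    return domain


domain = map_iothreads(SAMPLE_XML)
print(ET.tostring(domain, encoding='unicode'))
```

In the printed XML the `<driver>` element no longer has an `iothread` attribute and instead contains an `<iothreads>` child listing IOThread ids 1 to 4.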

If you need to go down one level further and customize the QEMU command-line, see my post on passing QEMU command-line options in libvirt domain XML.

More KubeVirt debugging tricks

The official KubeVirt documentation has a Virtualization Debugging section with more tricks for customizing libvirt logging, launching QEMU with strace or gdb, etc. Thanks to Alice Frosi for sharing the link!

Conclusion

It is possible to get libvirt access in KubeVirt for development and testing. This can make troubleshooting easier and it gives you the full range of libvirt domain XML if you want to experiment with features that are not yet exposed by KubeVirt.

February 12, 2024

Stefano Garzarella

vDPA: support for block devices in Linux and QEMU

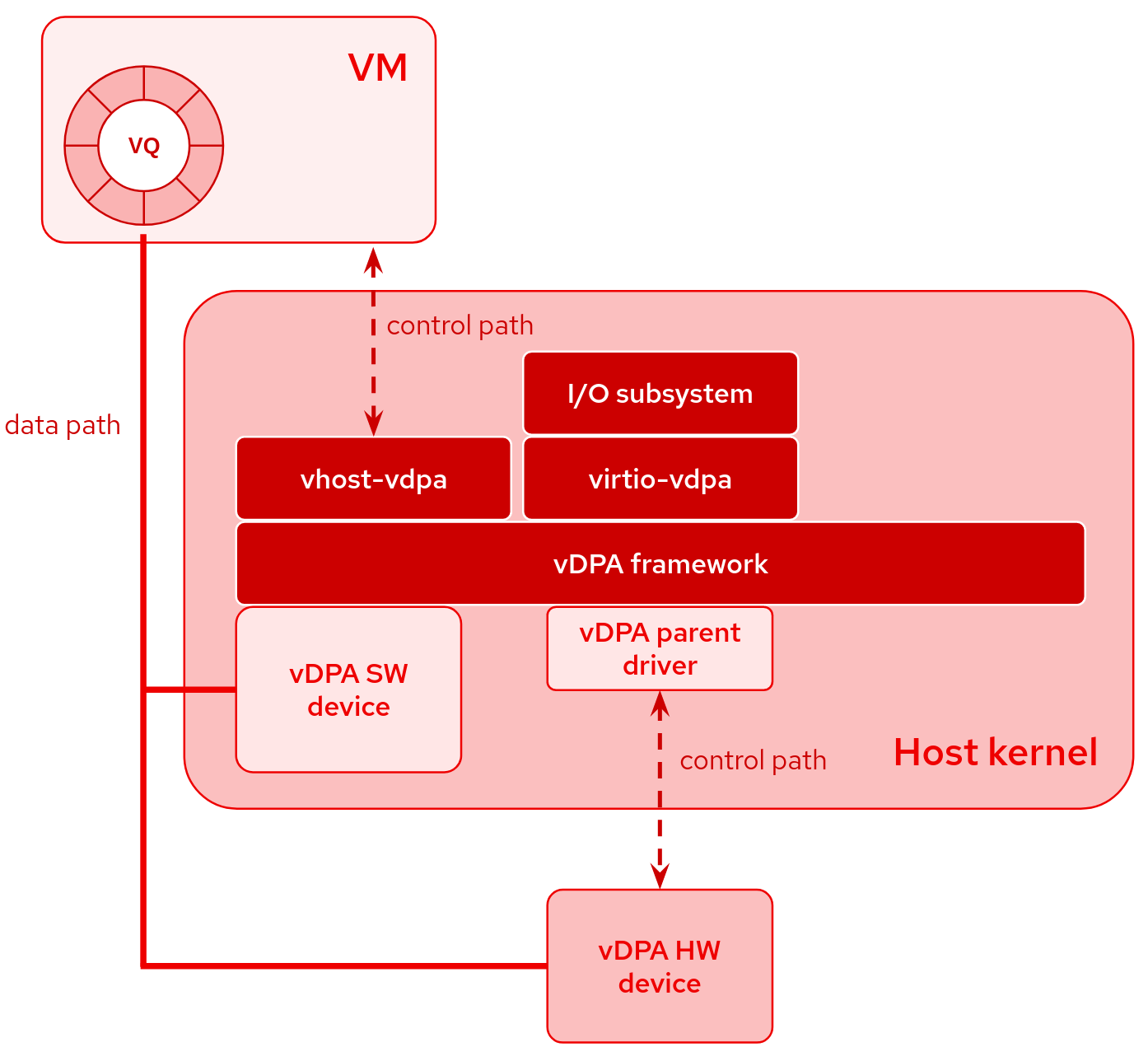

A vDPA device is a type of device that follows the virtio specification for its datapath but has a vendor-specific control path.

vDPA devices can be either physically implemented in hardware or emulated by software.

A small vDPA parent driver in the host kernel is required only for the control path. The main advantage is the unified software stack for all vDPA devices:

- vhost interface (vhost-vdpa) for userspace or guest virtio driver, like a VM running in QEMU

- virtio interface (virtio-vdpa) for bare-metal or containerized applications running in the host

- management interface (vdpa netlink) for instantiating devices and configuring virtio parameters

Useful Resources

Many blog posts and talks have been published in recent years that can help you better understand vDPA and the use cases. On vdpa-dev.gitlab.io we collected some of them; I suggest you at least explore the following:

Block devices

Most of the work in vDPA has been driven by network devices, but in recent years, we have also developed support for block devices.

The main use case is definitely leveraging the hardware to directly emulate the virtio-blk device and support different network backends such as Ceph RBD or iSCSI. This is the goal of some SmartNICs or DPUs, which are able to emulate virtio-net devices of course, but also virtio-blk for network storage.

The abstraction provided by vDPA also makes software accelerators possible, similar to existing vhost or vhost-user devices. We discussed that at KVM Forum 2021.

We talked about the fast path and the slow path in that talk. When QEMU needs to handle requests, like supporting live migration or executing I/O throttling, it uses the slow path. During the slow path, the device exposed to the guest is emulated in QEMU. QEMU intercepts the requests and forwards them to the vDPA device by taking advantage of the driver implemented in libblkio. On the other hand, when QEMU doesn’t need to intervene, the fast path comes into play. In this case, the vDPA device can be directly exposed to the guest, bypassing QEMU’s emulation.

libblkio exposes a common API for accessing block devices in userspace and supports several drivers. We will focus on the virtio-blk-vhost-vdpa driver, which is used by the virtio-blk-vhost-vdpa block device in QEMU. It only supports the slow path for now, but in the future it should be able to switch to the fast path automatically. QEMU has supported libblkio drivers since version 7.2, so you can use the following options to attach a vDPA block device to a VM:

-blockdev node-name=drive_src1,driver=virtio-blk-vhost-vdpa,path=/dev/vhost-vdpa-0,cache.direct=on \
-device virtio-blk-pci,id=src1,bootindex=2,drive=drive_src1 \

Anyway, to fully leverage the performance of a vDPA hardware device, we can always use the generic vhost-vdpa-device-pci device offered by QEMU that supports any vDPA device and exposes it directly to the guest. Of course, QEMU is not able to intercept requests in this scenario and therefore some features offered by its block layer (e.g. live migration, disk format, etc.) are not supported. Since QEMU 8.0, you can use the following options to attach a generic vDPA device to a VM:

-device vhost-vdpa-device-pci,vhostdev=/dev/vhost-vdpa-0
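
The choice between the two attachment methods can be captured in a small helper that a launch script might use. This is a minimal Python sketch, not an API offered by QEMU: the function name `vdpa_blk_args` and the `use_block_layer` flag are hypothetical, and the `bootindex` property from the first example is left out for brevity.

```python
def vdpa_blk_args(vhostdev, use_block_layer,
                  node_name="drive_src1", dev_id="src1"):
    """Build QEMU options for attaching a vDPA block device.

    use_block_layer=True keeps QEMU's block layer in the data path through
    the libblkio-backed virtio-blk-vhost-vdpa driver (QEMU 7.2 or newer).
    use_block_layer=False exposes the device directly to the guest with
    vhost-vdpa-device-pci (QEMU 8.0 or newer), so block layer features
    such as disk formats are not available.
    """
    if use_block_layer:
        return [
            "-blockdev",
            f"node-name={node_name},driver=virtio-blk-vhost-vdpa,"
            f"path={vhostdev},cache.direct=on",
            "-device",
            f"virtio-blk-pci,id={dev_id},drive={node_name}",
        ]
    return ["-device", f"vhost-vdpa-device-pci,vhostdev={vhostdev}"]


print(vdpa_blk_args("/dev/vhost-vdpa-0", use_block_layer=True))
print(vdpa_blk_args("/dev/vhost-vdpa-0", use_block_layer=False))
```

Note how the `node-name` of the `-blockdev` option is what the `drive` property of the `-device` option refers to; the generic device needs no such link because it bypasses the block layer.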

At KVM Forum 2022, Alberto Faria and Stefan Hajnoczi introduced libblkio, while Kevin Wolf and I discussed its usage in the QEMU Storage Daemon (QSD).

Software devices

One of the significant benefits of vDPA is its strong abstraction, enabling the implementation of virtio devices in both hardware and software, whether in the kernel or user space. This unification under a single framework, where devices appear identical to QEMU, facilitates the seamless integration of hardware and software components.

Kernel devices

Regarding in-kernel devices, starting from Linux v5.13, there exists a simple simulator designed for development and debugging purposes. It is available through the vdpa-sim-blk kernel module, which emulates a 128 MB ramdisk.

As highlighted in the presentation at KVM Forum 2021, a future device in the kernel (similar to the repeatedly proposed but never merged vhost-blk) could potentially offer excellent performance. Such a device could be used as an alternative when hardware is unavailable, for instance, facilitating live migration in any system, regardless of whether the destination system features a SmartNIC/DPU or not.

User space devices

In user space, we can use VDUSE instead. QSD supports it and thus allows us to export any disk image supported by QEMU as a vDPA device in this way:

qemu-storage-daemon \
    --blockdev file,filename=/path/to/disk.qcow2,node-name=file \
    --blockdev qcow2,file=file,node-name=qcow2 \
    --export type=vduse-blk,id=vduse0,name=vduse0,node-name=qcow2,writable=on
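
The command above chains three pieces together through node names: a protocol node for the file, a format node on top of it, and the export that refers to the format node. A minimal Python sketch that generates the same argument list for an arbitrary image follows; the helper name `qsd_vduse_export_args` and its parameters are hypothetical.

```python
import shlex


def qsd_vduse_export_args(image_path, image_format="qcow2", name="vduse0"):
    """Build a qemu-storage-daemon command exporting an image via VDUSE."""
    return [
        "qemu-storage-daemon",
        # Protocol node: opens the image file on the host.
        "--blockdev", f"file,filename={image_path},node-name=file",
        # Format node: interprets the image format on top of the file node.
        "--blockdev", f"{image_format},file=file,node-name={image_format}",
        # Export: exposes the format node as a vduse-blk device.
        "--export",
        f"type=vduse-blk,id={name},name={name},"
        f"node-name={image_format},writable=on",
    ]


argv = qsd_vduse_export_args("/path/to/disk.qcow2")
print(shlex.join(argv))
```

With the default arguments this prints the same command line as shown above, on a single line.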

Containers, VMs, or bare-metal

As mentioned in the introduction, vDPA supports different buses such as vhost-vdpa and virtio-vdpa. This flexibility enables the utilization of vDPA devices with virtual machines or user space drivers (e.g., libblkio) through the vhost-vdpa bus. Additionally, it allows interaction with applications running directly on the host or within containers via the virtio-vdpa bus.

The vdpa tool in iproute2 facilitates the management of vdpa devices through netlink, enabling the allocation and deallocation of these devices.

Starting with Linux 5.17, vDPA drivers support driver_override. This enhancement allows dynamic reconfiguration during runtime, permitting the migration of a device from one bus to another in this way:

# load vdpa buses

$ modprobe -a virtio-vdpa vhost-vdpa

# load vdpa-blk in-kernel simulator

$ modprobe vdpa-sim-blk

# instantiate a new vdpasim_blk device called `vdpa0`

$ vdpa dev add mgmtdev vdpasim_blk name vdpa0